About StreamBench

StreamBench is the first benchmark designed to evaluate the continuous improvement capabilities of large language model (LLM) agents over time. Traditional benchmarks evaluate a static snapshot of an LLM's capabilities. StreamBench instead evaluates how well LLM agents learn and improve iteratively from an input-feedback sequence, which reflects real-world deployment scenarios. StreamBench currently contains the following tasks: text-to-SQL generation, Python programming, tool use, medical diagnosis, and question answering.

News

- Sep. 26, 2024: StreamBench has been accepted to the NeurIPS 2024 Datasets and Benchmarks Track! See you in Vancouver!

How StreamBench Works

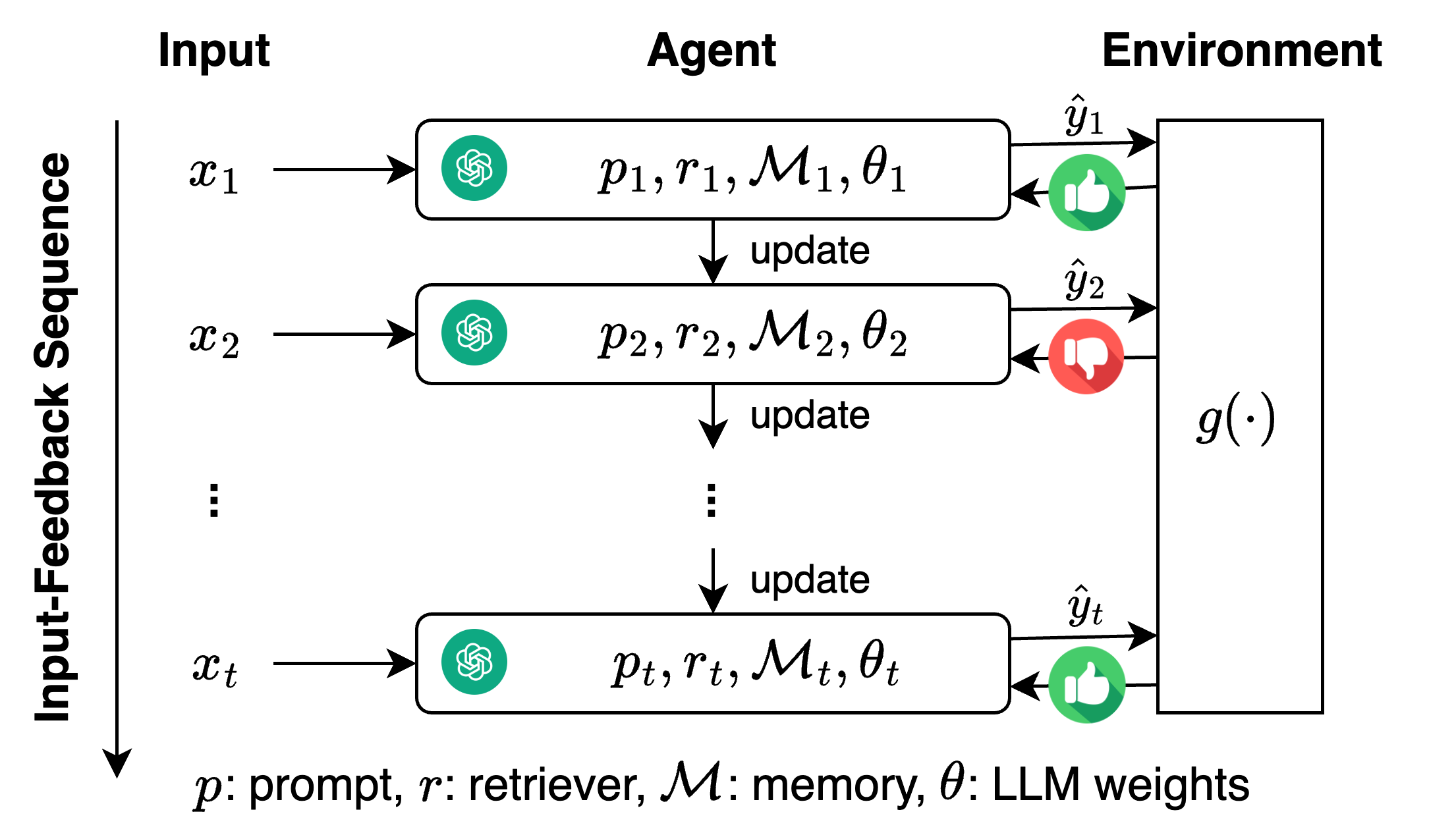

1. Input-Feedback Sequence: A schematic diagram of the streaming setting of StreamBench. Agents update their components (p, r, M, or θ) from an input-feedback sequence to achieve the highest final accuracy. Benchmark users design their own algorithms for updating the components of their language agents, with the goal of maximizing accuracy over the entire sequence.
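The streaming protocol described above can be sketched as a simple loop. This is a minimal illustration and not StreamBench's evaluation code. The `run_stream` function, the `predict`/`update` agent interface and the toy `EliminationAgent` are all hypothetical. The sketch also assumes that the feedback is a binary correctness signal.

```python
from typing import Callable, Dict, Iterable, List, Set, Tuple


class EliminationAgent:
    """Hypothetical toy agent whose only updatable component is a memory.

    For each input it tries candidate answers in a fixed order, remembers
    answers that received negative feedback, and reuses an answer once it
    has received positive feedback.
    """

    def __init__(self, candidates: List[str]) -> None:
        self.candidates = list(candidates)
        self.accepted: Dict[str, str] = {}       # input -> answer judged correct
        self.rejected: Dict[str, Set[str]] = {}  # input -> answers judged wrong

    def predict(self, x: str) -> str:
        if x in self.accepted:
            return self.accepted[x]
        tried = self.rejected.get(x, set())
        for c in self.candidates:
            if c not in tried:
                return c
        return self.candidates[0]  # every candidate was rejected; fall back

    def update(self, x: str, y_pred: str, feedback: bool) -> None:
        if feedback:
            self.accepted[x] = y_pred
        else:
            self.rejected.setdefault(x, set()).add(y_pred)


def run_stream(
    agent: EliminationAgent,
    stream: Iterable[Tuple[str, str]],
    is_correct: Callable[[str, str], bool],
) -> List[bool]:
    """Process the stream once, in order, returning per-step correctness."""
    results: List[bool] = []
    for x, y_true in stream:
        y_pred = agent.predict(x)              # answer with current components
        feedback = is_correct(y_pred, y_true)  # environment keeps y_true hidden
        agent.update(x, y_pred, feedback)      # agent sees only binary feedback
        results.append(feedback)
    return results


if __name__ == "__main__":
    stream = [("q1", "b"), ("q1", "b"), ("q1", "b"), ("q2", "a")]
    agent = EliminationAgent(["a", "b", "c"])
    results = run_stream(agent, stream, lambda pred, gold: pred == gold)
    print(results, sum(results) / len(results))  # [False, True, True, True] 0.75
```

The loop visits each instance exactly once and in order, so early mistakes count toward the final accuracy. The agent sees only the feedback and never the gold answer. Any improvement therefore has to come from how it updates its components.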

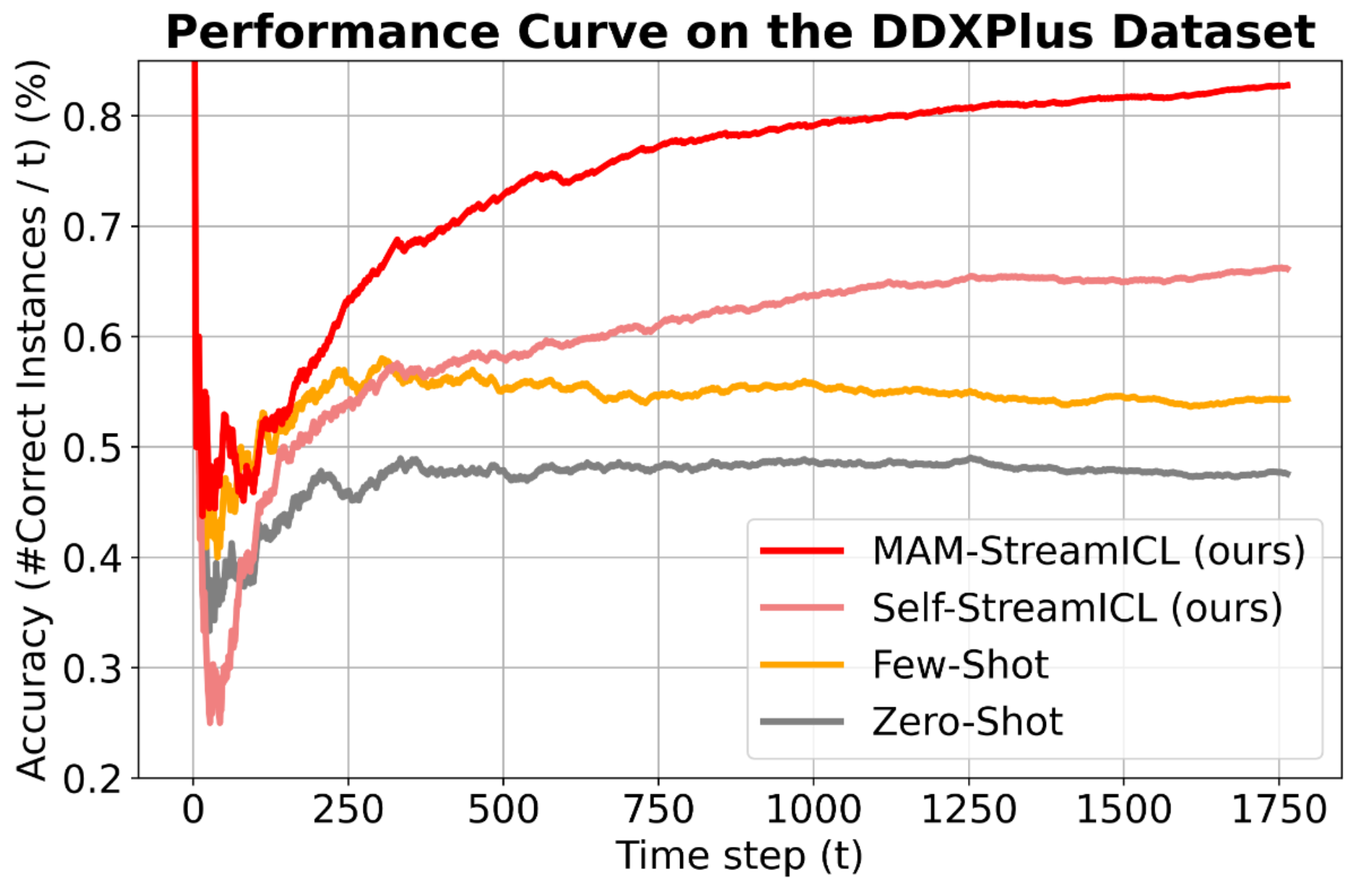

2. Example Task: Performance curve on the medical diagnosis dataset (DDXPlus) in StreamBench. LLM agents improve gradually with our proposed streaming baselines.
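A curve like this can be computed from the per-step correctness flags of a streaming run. The sketch below assumes that the plotted quantity is the accuracy averaged over all instances seen up to each time step. `running_accuracy` is a hypothetical helper and not part of StreamBench.

```python
from itertools import accumulate
from typing import List, Sequence


def running_accuracy(correct: Sequence[bool]) -> List[float]:
    """Return the accuracy over the first t instances, for t = 1..len(correct)."""
    # accumulate() yields the prefix sums of the 0/1 correctness flags.
    prefix_hits = accumulate(int(c) for c in correct)
    return [hits / t for t, hits in enumerate(prefix_hits, start=1)]


if __name__ == "__main__":
    print(running_accuracy([False, True, True, True]))
    # [0.0, 0.5, 0.6666666666666666, 0.75]
```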

Submission

Please follow the Submission Guideline (work in progress).

Citation

```bibtex
@article{wu2024streambench,
  title={StreamBench: Towards Benchmarking Continuous Improvement of Language Agents},
  author={Wu, Cheng-Kuang and Tam, Zhi Rui and Lin, Chieh-Yen and Chen, Yun-Nung and Lee, Hung-yi},
  journal={arXiv preprint arXiv:2406.08747},
  year={2024}
}
```

Leaderboard

| Date | Method & LLMs | Organization | BIRD | DS1000 | ToolBench | DDXPlus | HotpotQA |
|---|---|---|---|---|---|---|---|
| Oct 31, 2024 | Self-StreamICL + gpt-4o | Appier AI Research | 42.63 | 59.40 | 76.27 | 92.01 | 67.00 |
| Oct 31, 2024 | Self-StreamICL + gemini-1.5-flash | Appier AI Research | 41.20 | 52.20 | 75.07 | 86.34 | 65.20 |
| Oct 31, 2024 | MAM-StreamICL (gpt-3.5-turbo + gemini-1.0-pro + claude-3-haiku) | Appier AI Research | 36.68 | 43.10 | 75.87 | 83.50 | 55.20 |